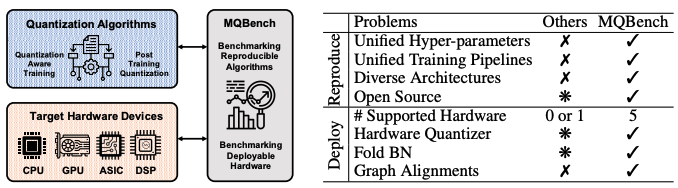

MQBench is a benchmark and framework for evaluating quantization algorithms under real-world hardware deployments. Built on the latest features of PyTorch, MQBench can automatically trace a full-precision model and convert it to a quantized model. It supports a range of hardware backends and algorithms so that researchers can benchmark the deployability and reproducibility of quantization methods. We open-source the MQBench library to support the community.

Reproducibility: MQBench unifies the training hyper-parameters so that different algorithms are compared fairly.

Deployability: MQBench summarizes the quantization schemes of 5 deep learning accelerators and aligns the quantization points through a flexible toolkit.

These two points are often neglected by previous works. For more detailed information, see our benchmark paper, toolkit and documentation.
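To make the deployability point more concrete, the sketch below shows what a "quantization scheme" can mean in practice. Hardware backends commonly differ in choices such as bit width and whether the integer grid is symmetric around zero. This is generic uniform quantization in plain Python, not MQBench's API: the function name `fake_quantize` and its parameters are hypothetical and chosen only for illustration.

```python
def fake_quantize(values, bits=8, symmetric=True):
    """Quantize a list of floats to an integer grid, then map them back.

    Illustrative only: a hypothetical helper, not part of MQBench.
    """
    lo, hi = min(values), max(values)
    if symmetric:
        # Signed grid centred on zero, e.g. [-127, 127] for 8 bits.
        qmax = 2 ** (bits - 1) - 1
        qmin = -qmax
        max_abs = max(abs(lo), abs(hi))
        scale = max_abs / qmax if max_abs > 0 else 1.0
        zero_point = 0
    else:
        # Unsigned grid [0, 2^bits - 1]; the float range is widened to
        # include zero so that 0.0 is represented exactly.
        qmin, qmax = 0, 2 ** bits - 1
        lo, hi = min(lo, 0.0), max(hi, 0.0)
        scale = (hi - lo) / (qmax - qmin) if hi > lo else 1.0
        zero_point = round(qmin - lo / scale)

    dequantized = []
    for v in values:
        q = round(v / scale) + zero_point   # float -> integer grid
        q = max(qmin, min(qmax, q))         # clamp to the representable range
        dequantized.append((q - zero_point) * scale)  # integer -> float
    return dequantized


if __name__ == "__main__":
    weights = [-1.0, 0.0, 0.5, 2.0]
    print("symmetric :", fake_quantize(weights, bits=8, symmetric=True))
    print("asymmetric:", fake_quantize(weights, bits=8, symmetric=False))
```

The same weights come out slightly differently under the two schemes. A model tuned under one scheme is therefore not guaranteed to behave identically on hardware that uses another, which is why simulated quantization needs to match the target backend.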

MQBench is flexible enough to support new hardware or quantization algorithms. Contributions are welcome: submit new results by following the instructions in the README.

@article{2021MQBench, title={MQBench: Towards Reproducible and Deployable Model Quantization Benchmark}, author={Yuhang Li* and Mingzhu Shen* and Jian Ma* and Yan Ren* and Mingxin Zhao* and Qi Zhang* and Ruihao Gong and Fengwei Yu and Junjie Yan}, journal={https://openreview.net/forum?id=TUplOmF8DsM}, year={2021} }

Toolchain Team

Sensetime Research
